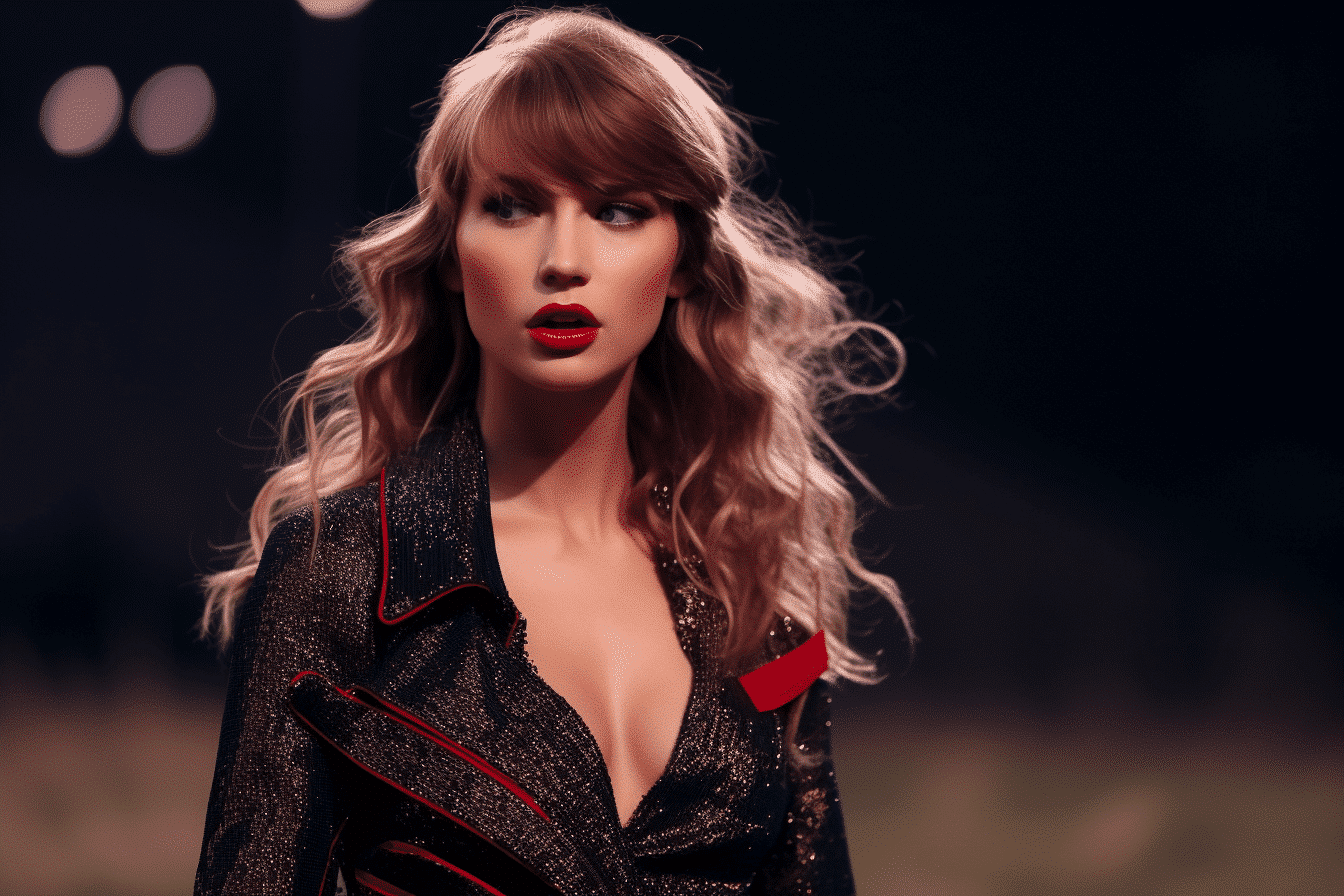

In a startling incident, explicit AI-generated images of global superstar Taylor Swift have gone viral on social media, raising serious concerns about the darker potential of mainstream artificial intelligence technology. The incident shows how easily AI can create convincingly realistic and, in this case, damaging images.

The fake images of Taylor Swift, distributed predominantly on X, the platform formerly known as Twitter, depicted the singer in compromising positions. They garnered tens of millions of views before being removed from various platforms, leaving a lasting digital footprint.

Although the images have been removed from mainstream social media sites, the nature of the internet ensures that they will likely continue to circulate on less regulated channels, causing ongoing distress for the artist and her fans.

Taylor Swift’s spokesperson has not commented on the incident. X, like many other major social media platforms, has policies that explicitly prohibit sharing synthetic, manipulated, or out-of-context media that may confuse people and cause harm. However, the company has not responded to CNN’s requests for comment on this specific incident.

This unsettling episode comes as the United States heads into a presidential election year, raising concerns that AI-generated images and videos could be misused in disinformation campaigns. Experts in digital investigations warn that generative AI tools are increasingly being exploited to create harmful content targeting public figures, and that this content spreads rapidly across social media platforms.

Ben Decker, who runs Memetica, a digital investigations agency, points out that social media companies lack effective plans for monitoring such content. X, for instance, has significantly reduced its content moderation team, relies heavily on automated systems and user reporting, and is currently under investigation in the EU over its content moderation practices.

Similarly, Meta, the parent company of Facebook, has cut teams that combat disinformation and harassment campaigns on its platforms. These cuts are especially concerning as the 2024 elections approach in the US and around the world, where disinformation campaigns can have significant consequences.

The origins of the AI-generated Taylor Swift images remain unclear. Some also appeared on platforms such as Instagram and Reddit, but X appears to be the primary platform where they became a widespread problem.

The incident also coincides with the growing popularity of generative AI tools such as ChatGPT and DALL-E, as well as a broader world of unmoderated AI models available on open-source platforms. Decker argues that the situation highlights how fragmented content moderation and platform governance have become: unless all stakeholders, including AI companies, social media platforms, regulators, and civil society, are aligned, such content is likely to keep proliferating.

Despite its distressing nature, this event may serve as a catalyst for addressing growing concerns about AI-generated imagery. Swift’s dedicated fanbase, known as “Swifties,” voiced their outrage on social media, pushing the issue into the spotlight. Just as previous incidents involving Swift have spurred legislative efforts, this one might prompt action from lawmakers and tech companies alike.

The technology used to create these images has also been used to share explicit content of people without their consent, a practice that is currently illegal in nine US states. The incident underscores the pressing need for comprehensive measures to tackle the harmful consequences of AI-generated content.