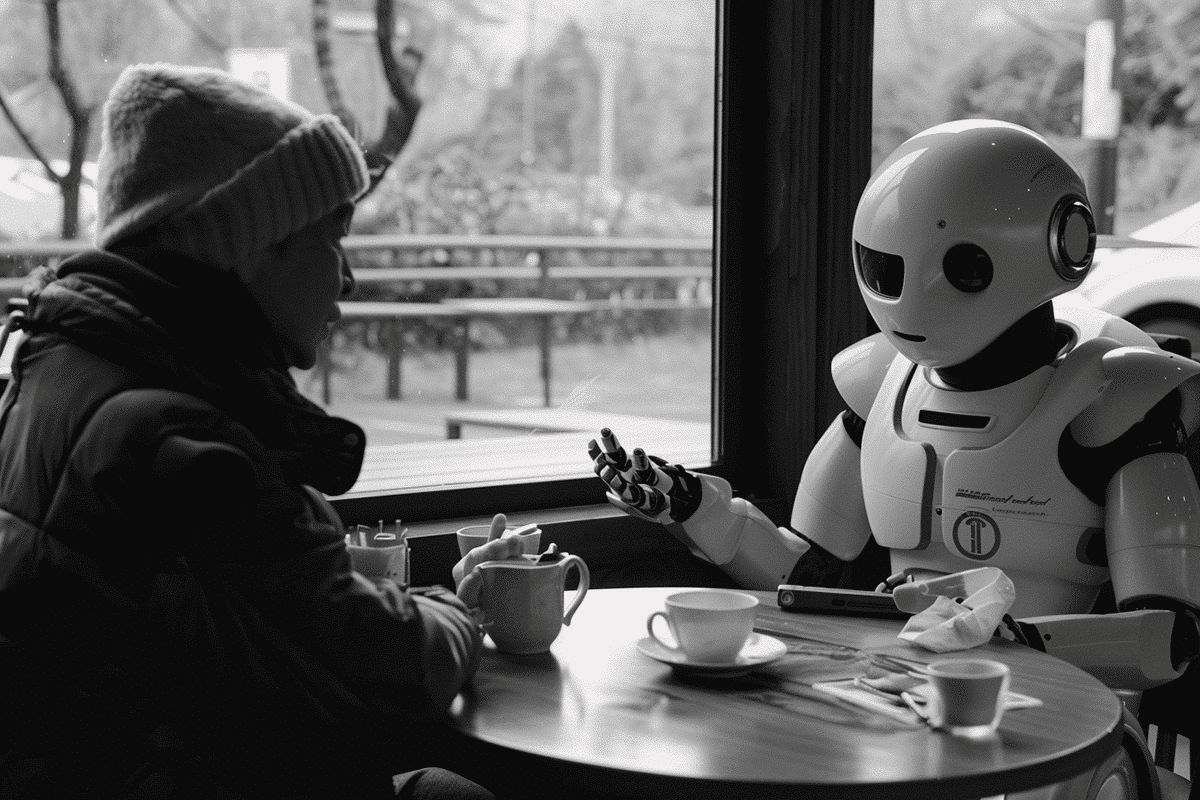

In a groundbreaking development that echoes a question posed by the French philosopher René Descartes and later reframed by the British mathematician Alan Turing, researchers at the University of California, San Diego, have brought us closer to answering whether machines can convincingly mimic human behavior. Their study suggests that, in a controlled experiment, participants could not reliably distinguish the large language model GPT-4 from a human after a five-minute conversation.

The Turing test, proposed by Turing in 1950, seeks to determine whether a machine can exhibit intelligent behavior indistinguishable from that of a human. Rather than asking directly whether machines can think, the test asks whether they can convincingly emulate human responses. After decades of progress in artificial intelligence, the new study suggests that AI's conversational abilities have advanced to the point where they can lead many people to believe they are talking to a human.

The study involved several AI systems and human participants. Each participant held a brief conversation with either a human or an AI and was then asked to judge whether the interlocutor was a human or a machine. When the interlocutor was GPT-4, participants identified it correctly only about 50% of the time, no better than a coin flip, which suggests a significant advance in AI's ability to mimic human conversational patterns.

Even so, the result has sparked debate about what it truly means for AI to pass the Turing test. Critics argue that convincing a human during a conversation does not necessarily equate to possessing human-like intelligence. People tend to anthropomorphize, attributing human characteristics to objects and systems, which can skew their perceptions of AI behavior.

To establish a baseline, the researchers included ELIZA, an early chatbot developed in the 1960s and known for its basic conversational capabilities. ELIZA convinced only 22% of participants that it was human, in stark contrast to modern AI systems like ChatGPT, which rely on far more sophisticated architectures and learning methods. The gap underscores how far the field of AI has advanced over the decades.

This breakthrough raises important questions about the future role of AI in society. As AI systems become more adept at mimicking human behavior, they could increasingly be used in client-facing roles, potentially replacing human workers in some sectors. Their ability to pass as human also opens avenues for misuse, such as fraud and misinformation, where telling human from machine becomes crucial.

The researchers also noted that participants relied less on traditional markers of intelligence, such as knowledge and reasoning, and more on linguistic style and socio-emotional cues. This suggests that social intelligence may be emerging as a new benchmark for measuring AI's capabilities in human-like interaction.

As AI continues to evolve, its integration into daily life carries growing implications. While the technology offers numerous benefits, including automating mundane tasks and analyzing large datasets, its capacity to mimic humans so closely calls for careful consideration of ethical guidelines and regulation.

The UC San Diego study marks a significant milestone in artificial intelligence research. It highlights the capabilities of current AI technologies and prompts a critical re-evaluation of what intelligence means in the age of machines. As AI becomes harder to tell apart from humans in certain contexts, the line between human and artificial intelligence continues to blur, setting the stage for a future in which AI may become an integral part of the social fabric.